Performing general language-conditioned bimanual manipulation tasks is of great importance for many applications ranging from household service to industrial assembly. However, collecting bimanual manipulation data is expensive due to the high-dimensional action space, which makes it challenging for conventional methods to handle general bimanual manipulation tasks. In contrast, unimanual policies have recently demonstrated impressive generalizability across a wide range of tasks thanks to scaled model parameters and training data, and they can provide shareable manipulation knowledge for bimanual systems. To this end, we propose a plug-and-play method named AnyBimanual, which transfers a pretrained unimanual policy to a general bimanual manipulation policy with few bimanual demonstrations. Specifically, we first introduce a skill manager that dynamically schedules the skill representations discovered from the pretrained unimanual policy for bimanual manipulation tasks; it linearly combines skill primitives with a task-oriented compensation to represent the bimanual manipulation instruction. To mitigate the observation discrepancy between unimanual and bimanual systems, we present a visual aligner that generates soft masks for the visual embedding of the workspace, aiming to align the visual input of the unimanual policy model for each arm with the input seen during the pretraining stage. AnyBimanual shows superiority on 12 simulated tasks from RLBench2, with a sizable 12.67% improvement in success rate over previous methods. Experiments on 9 real-world tasks further verify its practicality, with an average success rate of 84.62%.
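To make the skill manager idea more concrete, the following is a minimal sketch of a linear combination of skill primitives plus a task-oriented compensation term. It is an illustration only, not the released implementation: the function names, the plain-list vector representation, and the softmax normalization of the combination weights are all assumptions introduced here.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def compose_instruction(
    primitives: List[List[float]],
    scores: List[float],
    compensation: List[float],
) -> List[float]:
    """Combine skill primitives linearly, then add a compensation vector.

    primitives:   K hypothetical skill embeddings, each of dimension D
    scores:       K unnormalized scheduling scores (assumed to come from a
                  learned module; softmax normalization is an assumption)
    compensation: task-oriented residual of dimension D
    """
    weights = softmax(scores)
    dim = len(compensation)
    combined = [
        sum(w * p[i] for w, p in zip(weights, primitives)) for i in range(dim)
    ]
    return [c + r for c, r in zip(combined, compensation)]


if __name__ == "__main__":
    # Two toy primitives with equal scores and a small compensation term.
    embedding = compose_instruction(
        primitives=[[1.0, 0.0], [0.0, 1.0]],
        scores=[0.0, 0.0],
        compensation=[0.5, -0.5],
    )
    print(embedding)
```

In this sketch, one such embedding would be produced per arm, so that each arm's pretrained unimanual policy receives an instruction representation composed from shared primitives.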

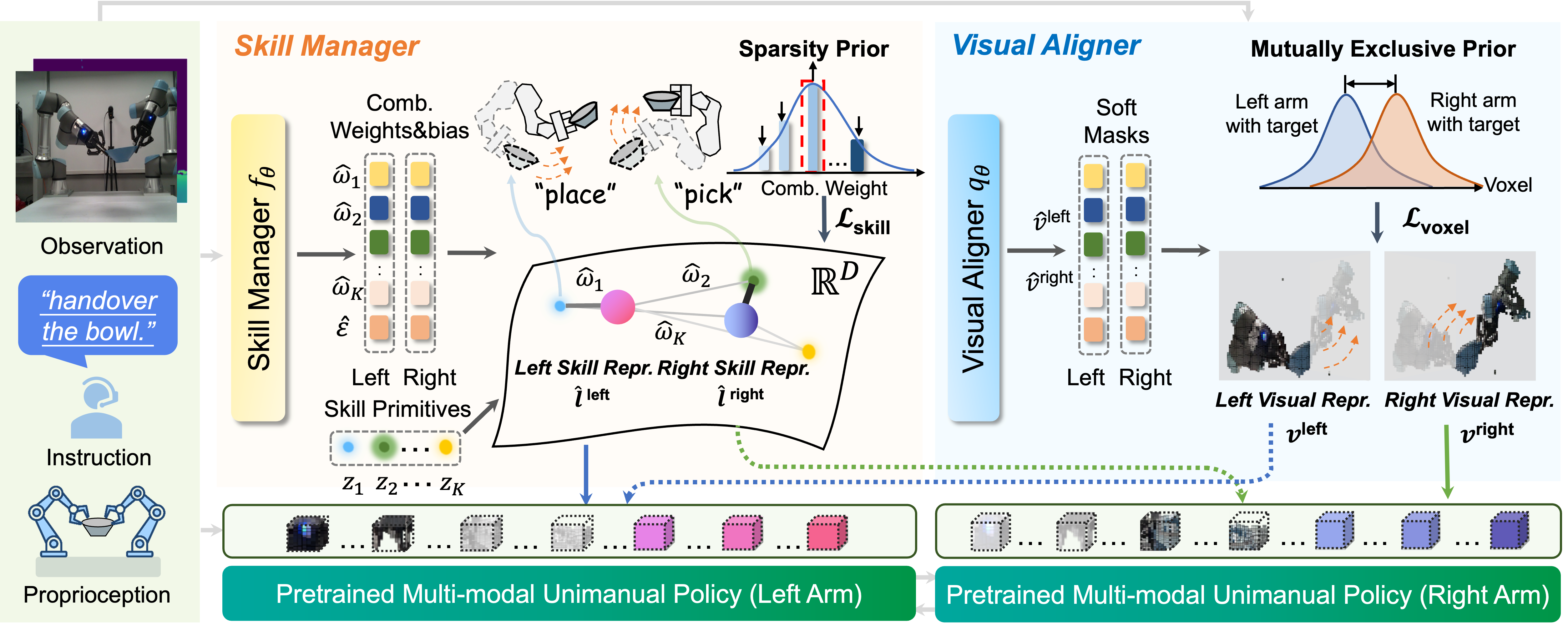

The overall pipeline of AnyBimanual, which primarily consists of a skill manager and a perception manager. The skill manager adaptively coordinates primitive skills for each robot arm, while the perception manager mitigates the distributional shift from unimanual to bimanual by decomposing the visual observation for each arm.
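The per-arm decomposition of the visual observation can be pictured as applying a separate soft mask to a shared visual embedding. The sketch below assumes a token-wise sigmoid mask and uses hypothetical names (`apply_soft_mask`, `left_logits`, `right_logits`); how the masks are actually predicted and trained is not specified here and is left out.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def apply_soft_mask(
    embedding: List[List[float]], mask_logits: List[float]
) -> List[List[float]]:
    """Scale each visual token by a soft mask value in (0, 1).

    embedding:   N visual tokens, each a feature vector
    mask_logits: N unnormalized mask scores, one per token (assumed to be
                 predicted by a learned module that is not shown here)
    """
    masks = [sigmoid(logit) for logit in mask_logits]
    return [[m * v for v in token] for m, token in zip(masks, embedding)]


if __name__ == "__main__":
    shared = [[2.0, 4.0], [1.0, 1.0]]   # toy embedding of the workspace
    left_logits = [10.0, -10.0]         # left arm attends to token 0
    right_logits = [-10.0, 10.0]        # right arm attends to token 1
    left_view = apply_soft_mask(shared, left_logits)
    right_view = apply_soft_mask(shared, right_logits)
    print(left_view)
    print(right_view)
```

Because the masks are soft rather than binary, each arm's view keeps a small contribution from every token, which is one way to keep the operation differentiable.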

The simulated experiments are conducted in RLBench2. We transfer PerAct, a well-recognized unimanual policy, to a bimanual policy with AnyBimanual, and find that the resulting policy can handle 12 challenging bimanual tasks simultaneously.

(a) pick laptop

(b) pick plate

(c) sweep to dustpan

(d) lift ball

(e) push box

(f) lift tray

(g) take out tray

(h) press buttons

(i) handover item easy

(j) handover item

(k) straighten rope

(l) put bottle in fridge

The real-world experiments are performed in a tabletop setup with object locations randomized every episode. AnyBimanual can perform 9 complex real-world bimanual manipulation tasks with one model.

We include multiple distractors in real-world experiments, and find AnyBimanual generalizes to these settings successfully by unlocking the commonsense held by unimanual base models.

The robot struggles to generalize to different backgrounds and object sizes, which leads to an out-of-distribution movement.

The robot fails to grasp the green block due to a small deviation, which may be caused by inconsistent data collection.

The low-level motion planner cannot handle the severe rotation predicted by the agent, which can result in a dangerous cup fall.

The author team would like to acknowledge Yuheng Zhou, Wenkai Guo, Jiarui Xu, Qianzhun Wang, Yongxuan Zhang, Zilai Wei, and Haolei Bai from Nanyang Technological University for participating in the expert data collection, and Xinyue Zhao from Nanyang Technological University for art design support.

@article{lu2024anybimanual,
  title={AnyBimanual: Transferring Unimanual Policy for General Bimanual Manipulation},
  author={Lu, Guanxing and Yu, Tengbo and Deng, Haoyuan and Chen, Season Si and Tang, Yansong and Wang, Ziwei},
  journal={arXiv preprint arXiv:2412.06779},
  year={2024}
}